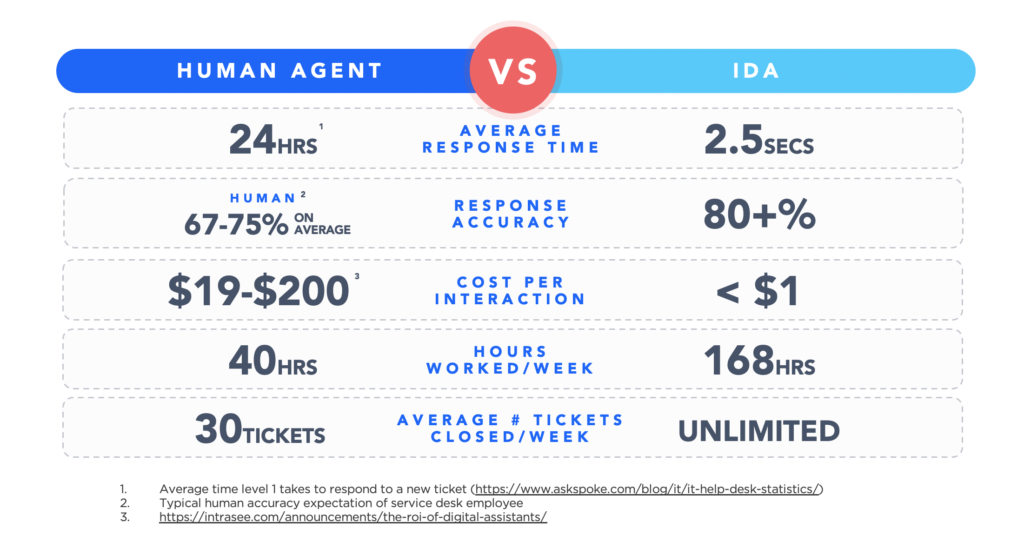

Every industrial revolution has been defined by increased efficiency and reduced costs. The new digital revolution we are embarking upon is no different. Things that took days to do can now be done in seconds, and things that used to cost hundreds of dollars can now be accomplished by spending less than one dollar.

Conversational AI is cool, but that’s not why it will change the world. It will change the world because it will be better and cheaper than many of the things we pay humans to do today.

In this blog we will focus on the impact of digital assistants in the world of human resources (HR), and on how they will change the way organizations service requests and questions from employees and managers, reducing organizational costs while improving the level of service. We will break down the two areas that should see large reductions in operating costs: the HR help desk and HR staffing levels.

What you will see is that even the most conservative approach to saving costs with a digital assistant will realize a 10% to 30% reduction in help desk and HR costs in one year, and that can be doubled in two years. Plus, you’ll be providing better service to your employees and managers too!

As Larry Ellison pointed out last year at Oracle OpenWorld, it’s not the software that is the most expensive item; it’s the cost of all the people who have to deal with all the ramifications of running the software.

1. HR Help Desk Costs

It has been said that help desks are the cost of (a lack of) quality. Scattered, and often misleading, information and complex processes inevitably force employees to reach out to live agents to help them solve their problems, answer their questions, or complete a task. Help desks are, often, the cost organizations pay for failures elsewhere in their internal systems.

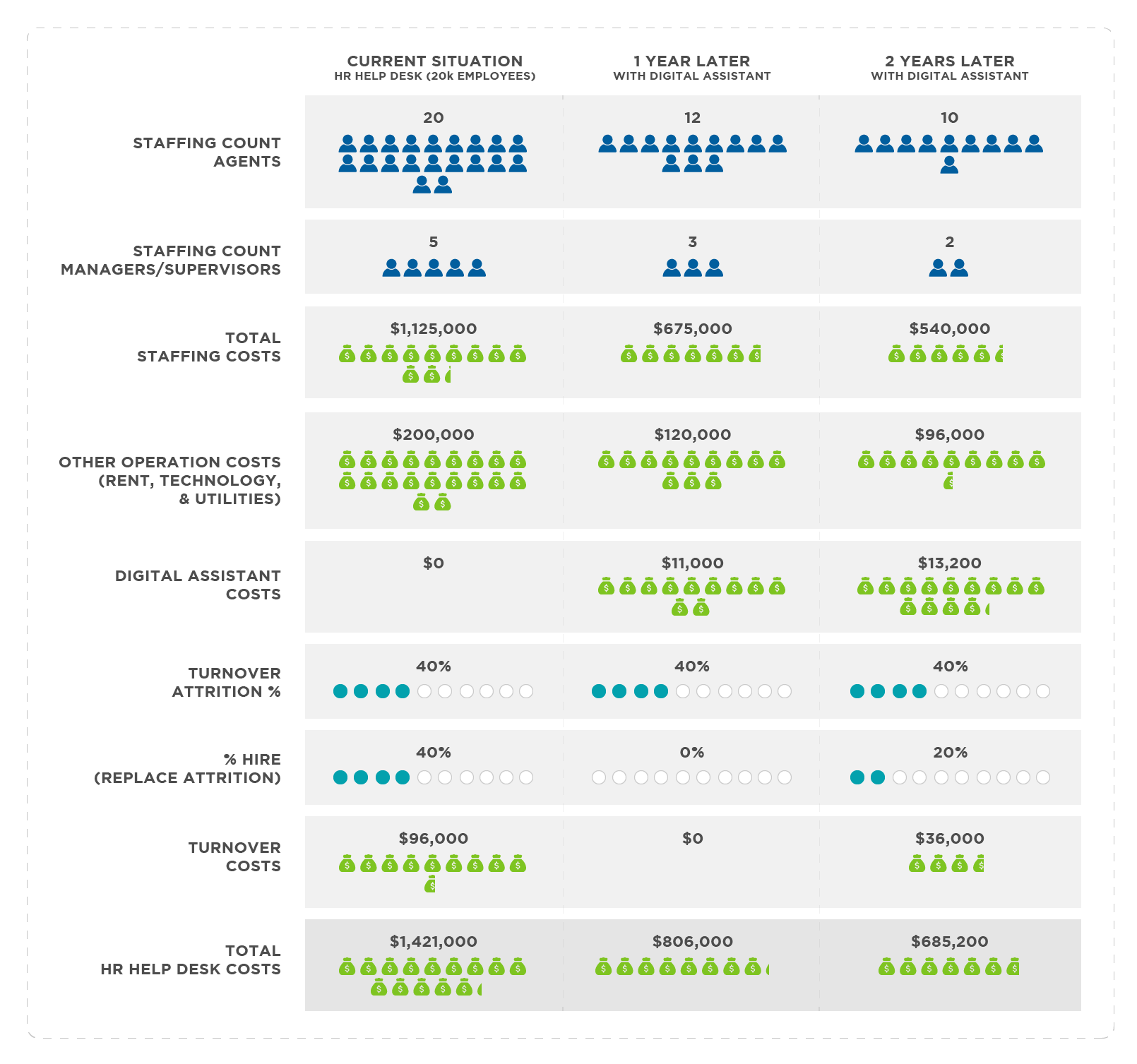

So, let’s break down the staffing costs of a help desk in order to drive to an expected cost saving:

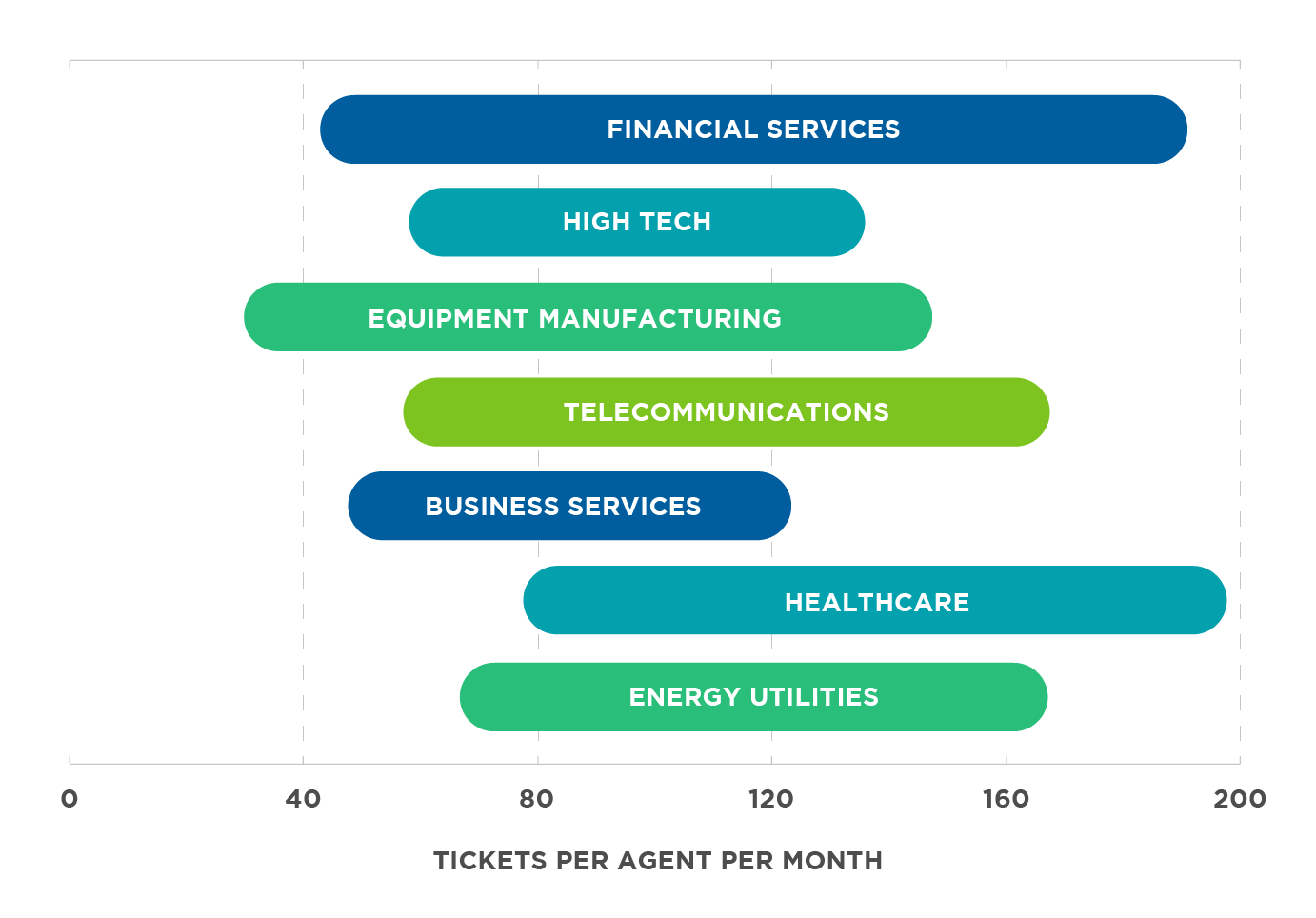

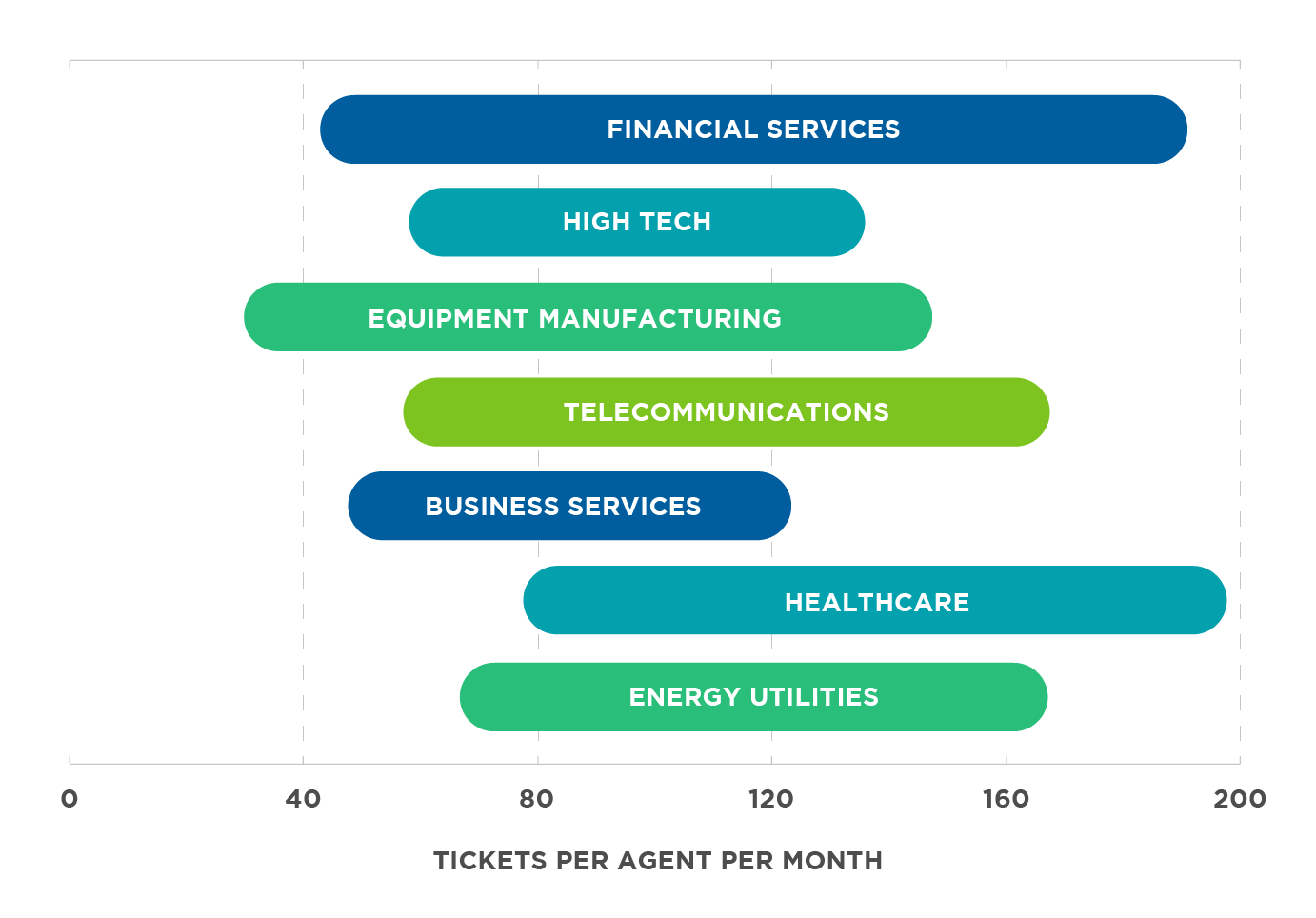

The average number of service agents per 1,000 seats ranges from 5.4 in the healthcare industry to 21.9 in the financial services industry. This is the metric that defines the staffing levels of an organization’s help desk. 10 per 1,000 would be a conservative average number across all industries, and so we will use that for the model in this exercise.

This means that for an organization with around 20,000 employees, the number of agents is around 20. In North America the average salary for a service desk analyst is $41,000. Multiply that by 20 and you get $820,000 per year. But that in itself is not the complete picture.

The ratio of agents to total service desk headcount is a measure of managerial efficiency. The average for this metric worldwide is about 78%. What this means is that 78% of service desk personnel are in direct, customer-facing service roles. The remaining 22% are supervisors, team leads, trainers, schedulers, QA/QC personnel, etc. And those people are even more expensive. This takes the total headcount up to around 25 (20 / 0.78).

The average salary for a service desk supervisor is $61,000 and the average for a service desk manager is $75,000, which means that those extra 5 people push the staffing costs up by at least $305,000 (5 × $61,000). That drives the total cost of salaries for staffing the service desk of a 20,000-employee organization up to $1,125,000. And when you factor in utilities, technology and facility expenses, the number rises to over $1,325,000.

And one final statistic to keep in mind. While the average overall employee turnover for all industries is 15%, inbound customer service centers have a turnover rate on average of 30-45%. It should come as no surprise that service center turnover is at least double what you’d see in other businesses.

Based on age, the differences are stark: workers age 20-24 stay in the job usually just 1.1 years, while workers 25-34 stay 2.7 years on average.

And the key metric here is that it costs on average around $12,000 to replace agents that leave. Why? The costs of turnover include the following:

- Recruiting

- Hiring time (HR time, interview time)

- Training, including materials and time

- Low-productivity time when employees first start out

- Supervisory time

- Overtime (remaining staff may have to cover extra shifts)

So, going back to our original metric of 20 service desk agents, if 40% leave each year, that equals 8 annual replacements at a total turnover cost of 8 × $12,000 = $96,000.

So, as a grand total, an organization of 20,000 employees has to pay around $1,421,000 a year to staff its service desk.
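The build-up of that total can be sketched in a few lines of Python. This is only an illustration of the arithmetic above, not a costing tool. The constant and function names are hypothetical, the five non-agent staff are all priced at the $61,000 supervisor salary (the lower bound the text uses), and the $200,000 overhead is inferred from the gap between $1,125,000 and $1,325,000 rather than stated directly.

```python
# Illustrative figures taken from the worked example; not measured data.
AGENTS = 20
AGENT_SALARY = 41_000
SUPPORT_STAFF = 5          # supervisors, team leads, trainers, etc.
SUPPORT_SALARY = 61_000    # assumption: everyone priced at the supervisor rate
OVERHEAD = 200_000         # assumption: inferred from $1,325,000 - $1,125,000
TURNOVER_PCT = 40          # share of agents replaced each year
REPLACEMENT_COST = 12_000  # cost to replace one agent


def help_desk_annual_cost() -> dict[str, int]:
    """Return each cost component and the grand total, in dollars."""
    payroll = AGENTS * AGENT_SALARY + SUPPORT_STAFF * SUPPORT_SALARY
    replacements = AGENTS * TURNOVER_PCT // 100
    turnover = replacements * REPLACEMENT_COST
    return {
        "payroll": payroll,
        "overhead": OVERHEAD,
        "turnover": turnover,
        "total": payroll + OVERHEAD + turnover,
    }


for name, dollars in help_desk_annual_cost().items():
    print(f"{name:>8}: ${dollars:,}")
```

Swapping in your own headcount, salaries and turnover rate shows quickly which component dominates the total for your organization.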

In terms of how cost per ticket is calculated (a key metric), this also depends on the number of tickets closed per agent per year. Again, this varies a lot by industry.

Figure 1: Tickets closed per agent per month

The average number of tickets closed per month per agent is around 120 cross-industry, or 1,440 per year. This means that with 20 agents the expected number of cases closed (not always successfully) is 28,800.

This means that the average cost per service ticket is around the $49 mark ($1,421,000 / 28,800) if you take into account a broader range of costs than just service agent salaries. So, while generally published average costs per ticket are estimated to be around $19-$20 per ticket, the true cost is much higher, but with massive variance based on industry.
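As a rough sketch, the cost-per-ticket calculation is just the fully loaded annual cost divided by yearly ticket volume. The function name and its parameters are hypothetical and exist only to illustrate the formula.

```python
def cost_per_ticket(
    annual_cost: float,
    agents: int,
    tickets_per_agent_per_month: int,
) -> float:
    """Fully loaded annual cost divided by tickets closed per year."""
    tickets_per_year = agents * tickets_per_agent_per_month * 12
    return annual_cost / tickets_per_year


# The article's example: $1,421,000 a year, 20 agents, 120 tickets per agent per month.
print(f"${cost_per_ticket(1_421_000, 20, 120):.2f}")  # $49.34
```

Because tickets closed per agent varies so much by industry, changing the third argument is the quickest way to see where the variance in cost per ticket comes from.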

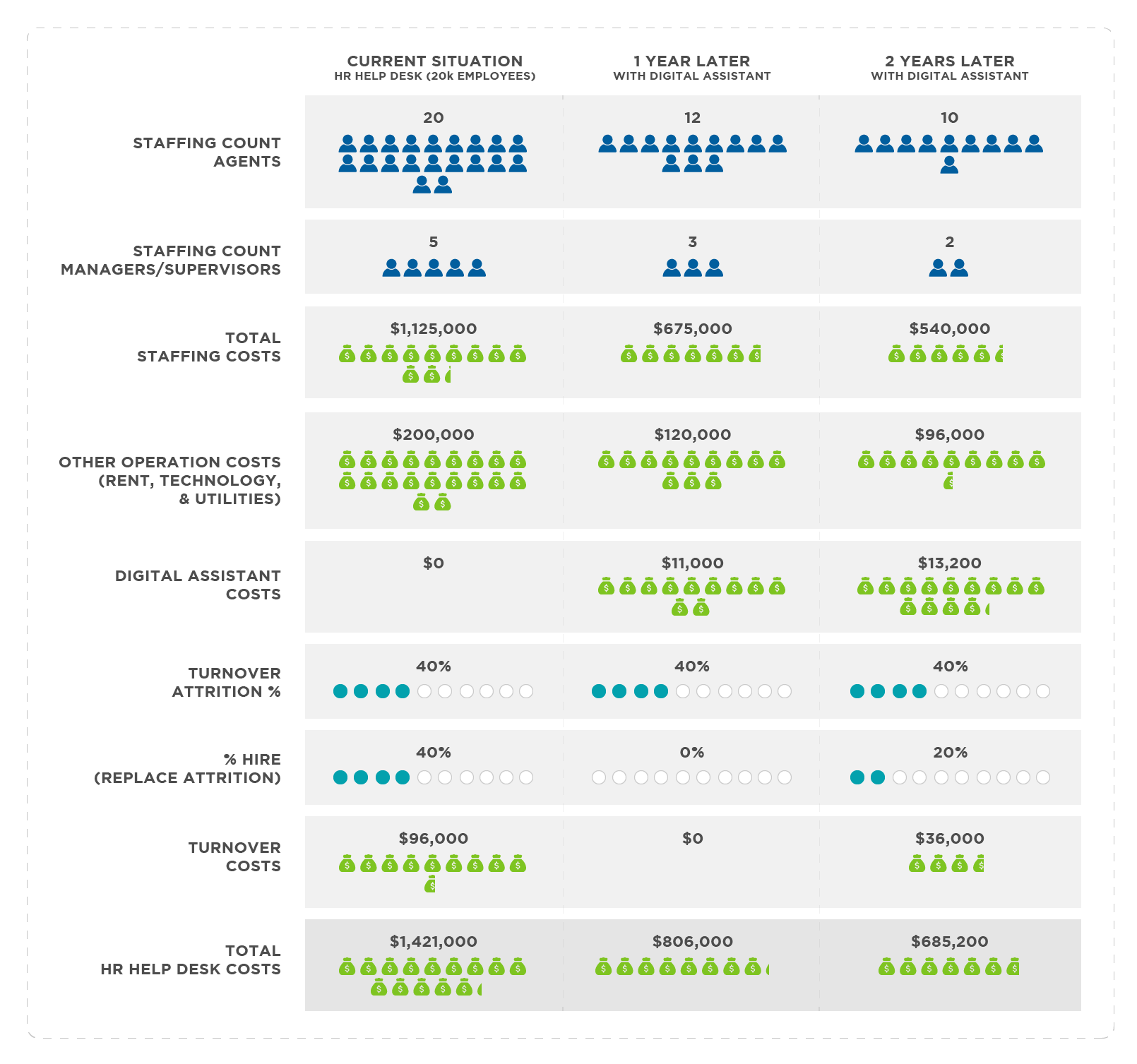

The good news is that the actual logistics around achieving ROI are therefore pretty straightforward. Instead of hiring 40% new staff every year due to attrition, just have the digital assistant pick up the slack and do not hire any new staff. This immediately saves your organization $96,000 in turnover/onboarding costs. It also allows you to drop the salary costs by around $450,000 (40% of a $1,125,000 payroll).

The same approach lets you reduce the other costs associated with your help desk: utilities, technology costs, and facility space (you can downsize based on the reduced headcount).

For a digital assistant, the average cost per ticket is less than $1. This means that if you replaced 40% of your help desk calls with digital assistant calls, the digital assistant costs would come to less than $11,000. Factor in a reduction in headcount and other expenses, plus a hiring freeze, and you would see an overall reduction in costs from $1,421,000 to $806,000 in just one year (see diagram below), with even greater savings after two years.

Figure 2: HR Help Desk ROI using a Digital Assistant

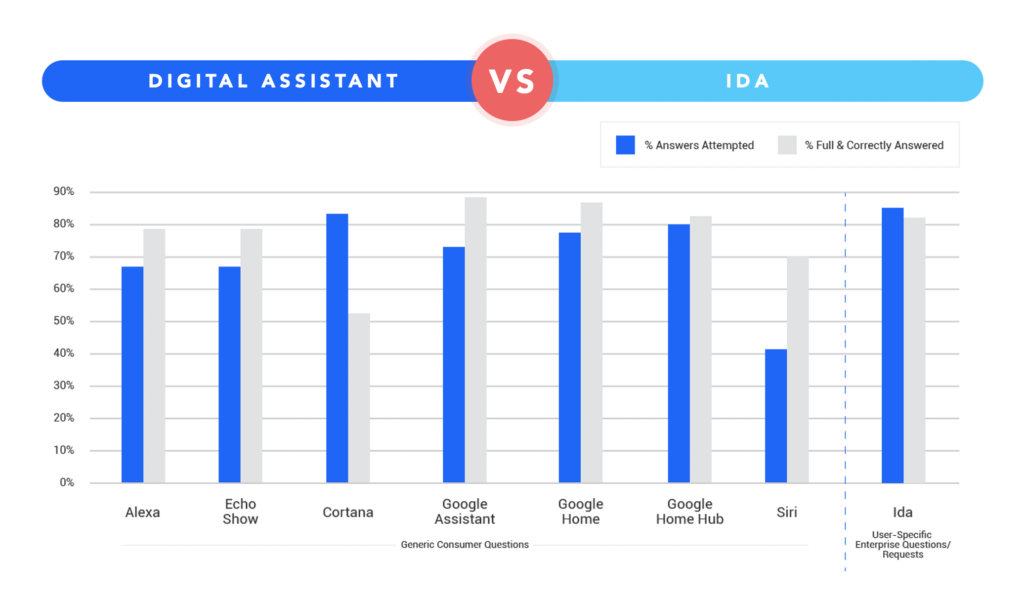
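One way to reconstruct the $806,000 figure is sketched below. The names are hypothetical, and two assumptions are not stated in the text: the $200,000 overhead (inferred from the earlier totals) and the idea that overhead shrinks by the same 40% as payroll. With those assumptions the numbers line up with the article's year-one total.

```python
# Baseline help desk costs from the worked example, in dollars.
BASELINE = {"payroll": 1_125_000, "overhead": 200_000, "turnover": 96_000}


def year_one_cost(
    deflected_pct: int = 40,   # share of tickets moved to the assistant
    da_cost: int = 11_000,     # article's upper bound for that ticket volume
    hiring_freeze: bool = True,
) -> int:
    """Help desk cost after moving `deflected_pct`% of tickets to the assistant."""
    remaining = 100 - deflected_pct
    payroll = BASELINE["payroll"] * remaining // 100
    # Assumption: overhead shrinks in step with headcount.
    overhead = BASELINE["overhead"] * remaining // 100
    # With a hiring freeze nobody is replaced, so turnover cost drops to zero.
    turnover = 0 if hiring_freeze else BASELINE["turnover"]
    return payroll + overhead + turnover + da_cost


before = sum(BASELINE.values())
after = year_one_cost()
print(f"before: ${before:,}  after: ${after:,}  saved: ${before - after:,}")
```

Note that `da_cost` is passed separately rather than derived, so it should be adjusted by hand if you try a different `deflected_pct`.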

Also, and just as importantly, the quality and accuracy of the digital assistant will continue to increase each subsequent year and will not plateau (as it does with humans). This is due to two factors:

- Digital assistants don’t leave your organization. There is zero turnover.

- Digital assistants benefit from machine learning. The more they see and the better training they are given, the more accurate they get. As an investment, they are a win-win all round. You teach them something once, and they remember forever. And they’ll never leave you or call in sick. And they’ll work 24/7, 365 days of the year. They can even speak multiple languages.

But this is not where the story of ROI ends; it’s really where it begins. Help desks are really designed to handle the easy, first level stuff. Once you get to the next level (where the agent can’t handle the ticket because it’s too complex for them), the costs are in the hundreds of dollars per ticket, as you are now dealing with a more expensive level of staffing and more minutes required to solve the problem or meet the request. This is where HR staffing levels come into play.

2. HR Staffing Costs

In the world of HR, HR experts handle many of the day-to-day HR activities and employee/manager requests in an organization. In the same way that there are agent staffing levels per industry, there are also HR staff to employee ratios, and these vary per industry. Typically, the more complex the organization, the higher the staffing level. But size matters too. There are economies of scale that kick in once an organization gets really big. But being global, having a mix of full time and part time employees, union and non-union, blue collar and white collar, will dictate higher ratios than a company where most people fit a similar profile.

But this does not mean that the ratio is stuck and cannot be changed. There is one key aspect of the staffing ratio that is in complete control of HR, and, therefore, has a huge capacity for change. And by change we mean reduced!

The role of HR is a key variable factor that influences the HR staff to employee ratio. A highly operational HR department will do different work and require a larger HR workforce compared to a highly strategic HR department. So, what specifically does this mean? How can HR move from being mostly operational to being mostly strategic (a much more fun and productive role, btw)?

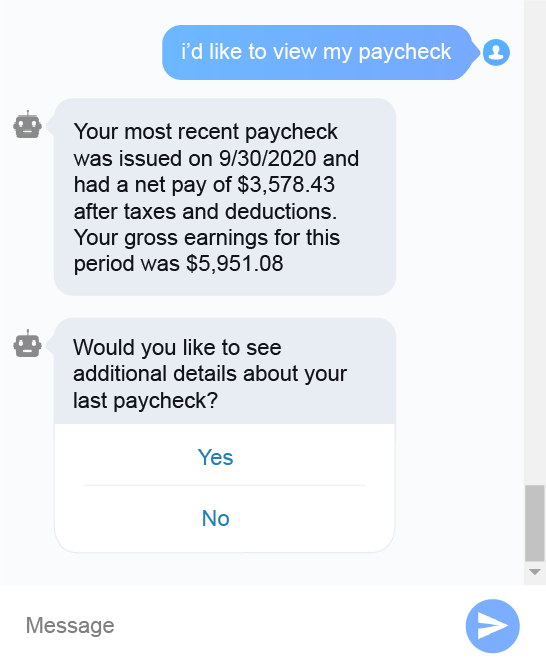

The answer is to move traditional HR admin tasks from humans to a digital assistant. HR admin work is probably the least popular thing that any highly educated and highly paid HR expert has to do, so removing this onerous work from their plate is a good thing!

Running reports, answering requests for data, following up with managers to ensure key tasks were performed, entering data into the HCM system: these are all repetitive operational tasks that can be automated and handled by a digital worker.

All this stuff is boring and repetitive to humans, and it takes a lot of time. But to a digital assistant it is fun and can be done extremely quickly. And the “right” digital assistant, with the proper skillset and training, can do almost all the HR administrative tasks that an HR expert can do. Often better, as they don’t forget obscure details and business rules, they don’t make mistakes, and they bring their “A” game every single second of the day. And, as stated before, they don’t leave your organization. Turnover is zero, so wisdom is accumulated and not lost via natural attrition.

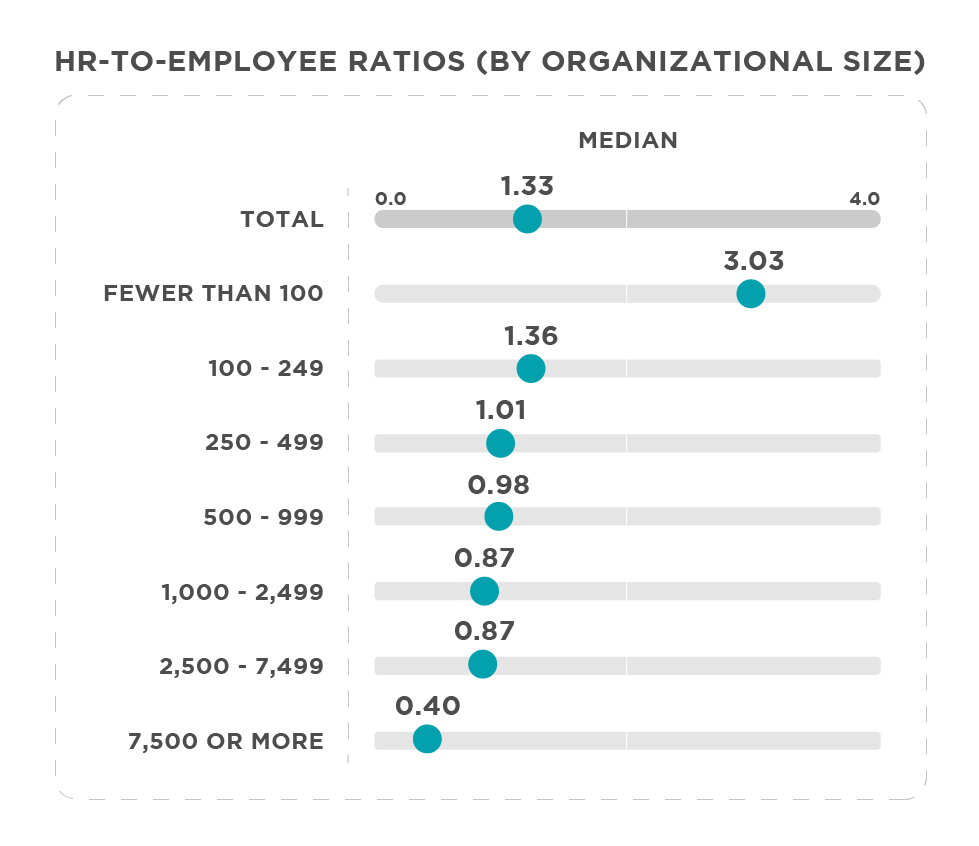

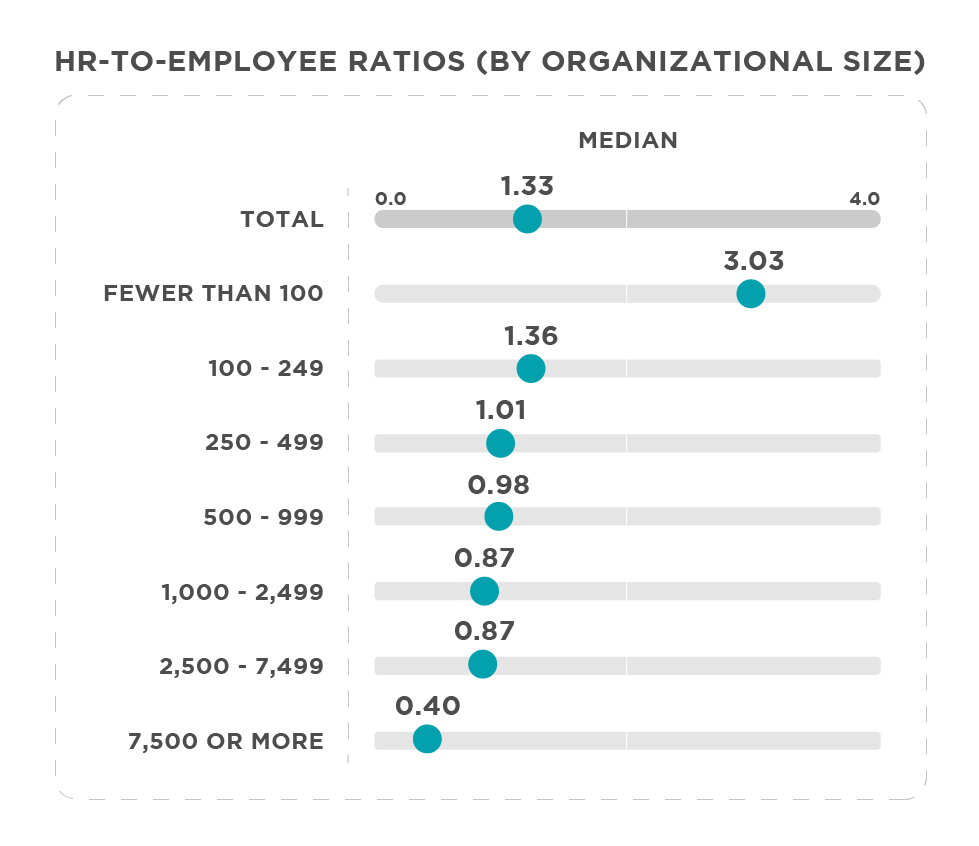

So let’s get into the math of the ROI. Bloomberg Law’s 2018 HR Benchmarks Report states that HR departments have a median of 1.5 employees per 100 people in the workforce. At the time, this represented an all-time high as it had long been around 1.0 per 100. Both the Society for Human Resource Management (SHRM) and Bloomberg numbers were very similar, so this number is considered very accurate.

SHRM also noted a clear reduction in the ratio based on organization size (an economy of scale). However, as the size of the company rises, so does the average compensation of HR staff (which explains why published averages are very misleading). Working in HR for a large company can be twice as financially rewarding as for a very small company. The reason is complexity (on many levels). If you want HR people who understand complex organizations, then you have to pay a premium.

Figure 3: HR staff to employee ratios cross-industry

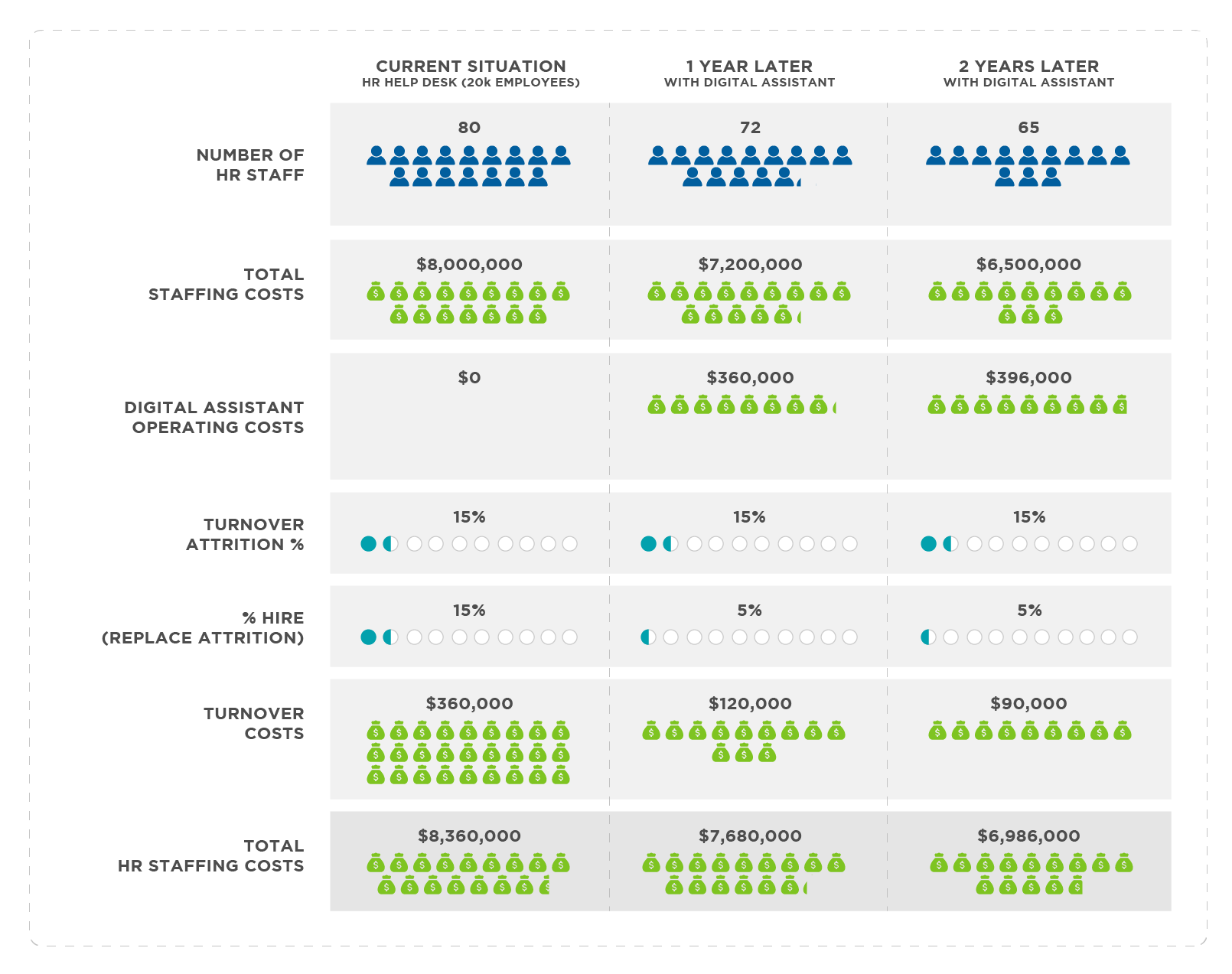

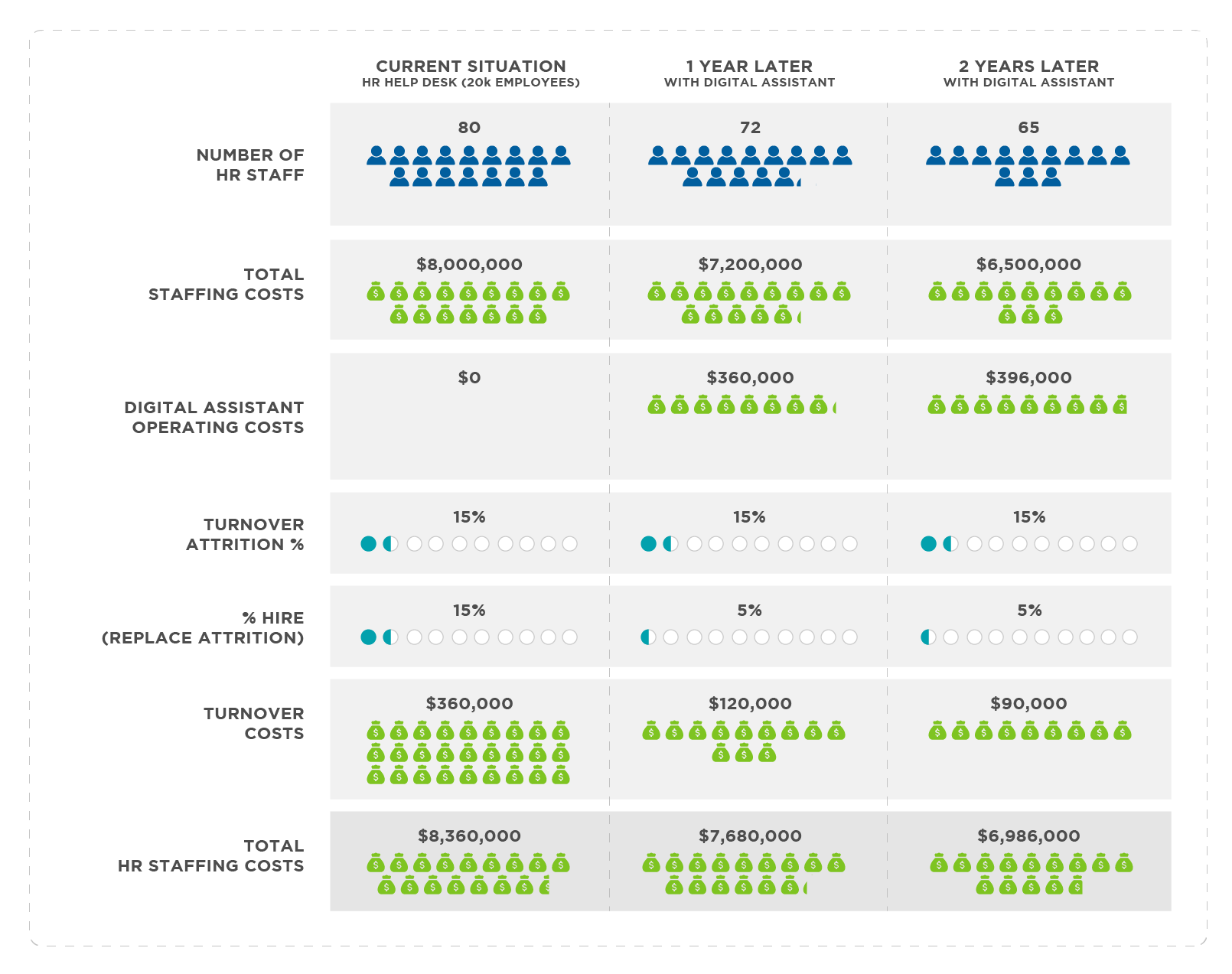

Using our example of an average organization with 20,000 employees and a ratio of 0.4 HR staff for every 100 employees, the HR staffing level would be around 80. At this size of organization the average level of HR compensation would be around $100,000, making the total spend equal to $8,000,000 per year. Note: that’s a lot more than the $1,125,000 spent on service desk salaries.

In the world of HR staffing, turnover is more in line with other industries, at around 15% per year, though the cost of hiring HR staff is much higher than the $12,000 for service agents. For HR staff it costs roughly $30,000 to replace each person who leaves (recruiting, interviewing, training, etc.). So, in our example, 12 new staff are required every year at a turnover cost of $360,000, making the total annual cost equal to $8,360,000.
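The HR baseline follows the same pattern as the help desk calculation and can be sketched as below. The function and parameter names are hypothetical. The ratio is expressed per 1,000 employees (4 per 1,000 is the same as 0.4 per 100) purely so the arithmetic stays in whole numbers.

```python
def hr_baseline_cost(
    employees: int = 20_000,
    hr_staff_per_1000: int = 4,       # 0.4 HR staff per 100 employees
    avg_compensation: int = 100_000,
    turnover_pct: int = 15,
    replacement_cost: int = 30_000,
) -> dict[str, int]:
    """Annual HR staffing cost before any digital assistant is introduced."""
    headcount = employees * hr_staff_per_1000 // 1000
    salaries = headcount * avg_compensation
    leavers = headcount * turnover_pct // 100
    turnover = leavers * replacement_cost
    return {
        "headcount": headcount,
        "salaries": salaries,
        "leavers": leavers,
        "turnover": turnover,
        "total": salaries + turnover,
    }


for name, value in hr_baseline_cost().items():
    print(f"{name:>9}: {value:,}")
```

Since the article notes that the ratio falls and compensation rises with organization size, the two parameters should be changed together when modelling a smaller or larger company.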

The big question then is how much of this work can be taken over by a digital assistant? The answer isn’t quite as clear as with the service desk. It all depends on the skillset of the digital assistant, and HR taking a proactive approach to how it replaces natural attrition of HR staff.

But the expectation, based on the results of early projects, is that the best digital assistants can take over at least 10-30% of HR admin work from HR staff. And that is just for 2020. This number should grow by leaps and bounds each year for the top digital assistant performers.

Using a conservative approach, if a company decided to hire just 5% new HR staff each year instead of the usual 15%, and used the digital assistant to pick up the slack of the 10% of the positions left unfilled, the savings would still be considerable. Let’s examine the resulting cost savings and see how this looks in detail.

In this scenario, total costs would fall in year one from $8,360,000 to $7,680,000. The staffing level would drop from 80 to 72 (12 people would leave and only 4 new people would be hired), giving $7,200,000 in salaries plus $120,000 in turnover costs, while digital assistant operational costs would amount to roughly $360,000 to cover the slack. So, the total net saving in year one would be $680,000 (excluding implementation and configuration costs), with a huge potential for much bigger savings in future years.

Implementing and configuring a digital assistant that could handle both help desk and HR admin tasks would likely cost in the realm of $100,000 to $250,000. But this would be a one-time fee, and would result in a year one total saving of HR staffing costs of between $800,000 and $900,000.

Year two would see greater savings, as there would be no implementation costs, and the new hire rate would again be set at (a maximum of) 5%, taking the HR staffing level from 72 to 65.

Year two HR salary costs would therefore be $6,500,000. Adding a turnover cost of $90,000 (10 people would leave and only 3 new people would be hired) gives a grand total of $6,590,000. Because the digital assistant would be taking on more work in year 2, its cost would rise to $396,000, which would result in a total cost in year 2 of $6,986,000 (see graph below for details).

Figure 4: HR Staffing ROI using a Digital Assistant
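The year-by-year figures can be reproduced with a short projection. This is a minimal sketch under the article's assumptions (15% attrition, a 5% hiring cap, $30,000 per hire, and the stated digital assistant costs of $360,000 and $396,000). The function name and parameters are hypothetical, and fractional people are rounded down because that is what matches the article's year-two numbers.

```python
def project_hr_costs(
    headcount: int = 80,
    avg_compensation: int = 100_000,
    attrition_pct: int = 15,
    hire_pct: int = 5,
    replacement_cost: int = 30_000,
    assistant_costs: tuple[int, ...] = (360_000, 396_000),
) -> list[dict[str, int]]:
    """One row per year; each year's assistant cost comes from `assistant_costs`."""
    rows = []
    for year, assistant in enumerate(assistant_costs, start=1):
        # Integer division rounds down, matching the article (10.8 leavers -> 10).
        leavers = headcount * attrition_pct // 100
        hires = headcount * hire_pct // 100
        headcount = headcount - leavers + hires
        salaries = headcount * avg_compensation
        turnover = hires * replacement_cost  # only actual hires incur the cost
        rows.append({
            "year": year,
            "headcount": headcount,
            "salaries": salaries,
            "turnover": turnover,
            "assistant": assistant,
            "total": salaries + turnover + assistant,
        })
    return rows


for row in project_hr_costs():
    print(f"year {row['year']}: headcount {row['headcount']}, total ${row['total']:,}")
```

Implementation costs are left out on purpose, as in the text; a one-time fee could be added to the first row to see its effect on the year-one saving.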

In summary, correct implementation of a digital assistant solution that can handle both HR help desk AND HR admin requests is by far the best approach to achieve maximum ROI. Done effectively it will also realize superior service levels by providing faster and more accurate turnarounds for your entire workforce in a way that is far more convenient for them.

Welcome to the world of high ROI, and welcome to the next industrial revolution. It’s ready and available now.
