In the burgeoning field of Generative AI, misconceptions are as common as the vendor emails flooding your inbox touting it as the panacea for modern business woes. Is that amazing demo you saw real? Is it ready for production use? How much time and money will it take? In this post, we’re setting the record straight on the myths prevalent in today’s discourse, particularly from the perspective of enterprise customers using chatbots to understand language and produce answers.

Understanding Traditional AI

Before we examine Generative AI, it is important to understand traditional machine learning (ML) and how it differs from Generative AI. Many ML models are neural networks: digital structures loosely inspired by the network of neurons in the human brain. In short, the model learns groupings of patterns from examples, which allows it to handle an input it has never seen before and still produce the expected output.

These models are trained by giving the AI examples of inputs along with the expected output for each. When a new input arrives, the model uses inference to determine which output should be delivered. In natural language processing (NLP) chatbots, these inputs are mapped to Intents: every Intent is defined separately, along with the output it should generate.

How Is Generative AI Different?

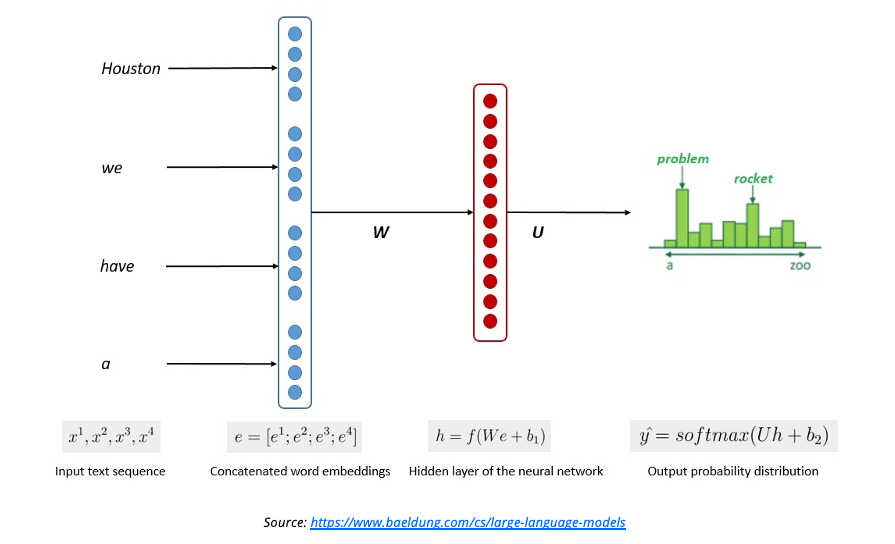

Generative AI, popularized by models such as GPT, does away with predefined intents and outputs. Instead, it generates responses in real time, based on vast training datasets and complex probabilities of which words tend to follow which. The magic of Generative AI isn’t sorcery but sophisticated mathematics. Doing Generative AI well requires large language models (LLMs) with billions of parameters, and a single training run can cost up to $100 million. As such, few organizations looking to use Gen AI for their internal operations will take that cost on themselves; instead, they pay a Gen AI provider to use its model. Because Generative AI is intentless, meaning you don’t have to explicitly set up a list of questions it can answer, many believe it is a way to hyper-scale the outputs an AI chatbot can support.
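As a drastically simplified illustration of word-order probabilities, the sketch below counts which word follows which in a tiny hypothetical corpus, then generates text one word at a time by sampling from those probabilities. Real LLMs use neural networks with billions of parameters, operate on tokens, and consider far longer contexts, so treat this bigram model as an analogy rather than a description of how GPT works internally.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each other word (<s> and </s> mark sentence edges)."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts: dict[str, Counter], prev: str) -> dict[str, float]:
    """Turn follow-counts into a probability distribution over the next word."""
    total = sum(counts[prev].values())
    if total == 0:
        return {}
    return {w: c / total for w, c in counts[prev].items()}


def generate(counts: dict[str, Counter], rng: random.Random, max_words: int = 20) -> str:
    """Sample one word at a time from the learned probabilities until the end marker."""
    word, output = "<s>", []
    for _ in range(max_words):
        probs = next_word_probs(counts, word)
        if not probs:
            break
        word = rng.choices(list(probs), weights=list(probs.values()))[0]
        if word == "</s>":
            break
        output.append(word)
    return " ".join(output)
```

Nothing in this loop checks whether the generated sentence is true; it only follows the probabilities learned from the data.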

The big challenge with using Gen AI to power an enterprise chatbot is that you can’t practically train it on your own enterprise data, because training is so expensive. The most practical strategy to get around this limitation is RAG (Retrieval-Augmented Generation), which works in three steps: retrieve, augment, generate. First, it retrieves pertinent data from your enterprise databases or other sources. Next, it augments the LLM prompt with this information, aiming to guide the model toward responses that are not only relevant but also confined to the information in the augmented prompt. Finally, the LLM generates the answer. You’re effectively asking the LLM, “Considering the huge public dataset you’ve already been trained on, but also this small private dataset I’m giving you now, please answer this question.” This technique tends to produce more targeted and accurate output, leveraging the strengths of LLMs while keeping costs manageable.
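The three RAG steps can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the documents are hypothetical, the keyword-overlap retriever is a stand-in for the embedding-based vector search many production systems use, and `call_llm` is a hypothetical placeholder for whichever provider API you actually call.

```python
import re
from typing import Callable

# Hypothetical private knowledge base; in practice this would be your policy
# repository, HR system, or other enterprise source.
DOCUMENTS = [
    "Employees accrue 1.5 vacation days per month of service.",
    "Expense reports must be submitted within 30 days of travel.",
    "Remote work requires written approval from a manager.",
]


def words(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    """Retrieve: rank documents by word overlap with the question (stand-in for vector search)."""
    q = words(question)
    scored = [(len(q & words(d)), d) for d in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]


def build_prompt(question: str, context_docs: list[str]) -> str:
    """Augment: wrap the retrieved text and the question into a single prompt."""
    context = "\n".join(f"- {d}" for d in context_docs)
    return (
        "Answer using ONLY the context below. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


def answer(question: str, call_llm: Callable[[str], str]) -> str:
    """Generate: send the augmented prompt to the LLM via the hypothetical call_llm."""
    prompt = build_prompt(question, retrieve(question, DOCUMENTS))
    return call_llm(prompt)
```

Passing `lambda prompt: prompt` as `call_llm` simply echoes the augmented prompt, which is a handy way to inspect exactly what the model would be given. The instruction to answer only from the context is a request, not a guarantee; the model can still ignore it.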

Misconception #1: Generative AI can answer any question flawlessly

The belief that Generative AI is infallible is the first fallacy. Gen AI isn’t concerned about being right or wrong. It is just generating word sequences based on patterns in the data it is trained on or prompted with. Because that data is human-derived and humans are imperfect, Gen AI inherits that imperfection. Should we, then, accept it? AI is a means of automating what humans do, so if the AI is imperfect, but less so than the humans, does it matter? If the AI produces a better outcome more often, does it have to be perfect?

The challenge lies in the lack of administrative control over what is produced, coupled with a tone of confidence even when an answer is incorrect. The AI doesn’t actually understand its own training data or the questions it is asked; even so, it has been trained to generate confident, authoritative-sounding answers. It can therefore produce convincing answers that are spectacularly, even dangerously, wrong. These are referred to as hallucinations.

Misconception #2: Generative AI is plug ‘n play

ChatGPT was trained largely on public data such as Wikipedia, a process that took years and thousands of hours of human supervision. Using a public model like GPT, or even the enterprise edition of a Large Language Model, isn’t a matter of simply plugging it in and watching it work. Despite the marketing shine, Generative AI does not operate independently or with its own intentions. These large models are tools that operate within the constraints set by their developers and the data they were trained on. In the enterprise, that data needs to extend well beyond Wikipedia to HR records, policy documents, procedures, and more. Who identifies, curates, maintains, and updates this data and ensures it is timely, error-free, and high-quality? That’s right: you do.
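Parts of that ongoing ownership can be supported with simple tooling. As one hypothetical example, the sketch below flags knowledge articles whose last review is older than a chosen interval, so their owners can re-check them before a chatbot serves stale answers. The `KnowledgeArticle` fields and the 180-day default are assumptions for illustration, not a standard.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class KnowledgeArticle:
    title: str
    owner: str            # person accountable for keeping the article accurate
    last_reviewed: date   # when a human last confirmed the content is correct


def stale_articles(
    articles: list[KnowledgeArticle],
    today: date,
    max_age: timedelta = timedelta(days=180),  # hypothetical review interval
) -> list[KnowledgeArticle]:
    """Return articles not reviewed within max_age, oldest review first."""
    stale = [a for a in articles if today - a.last_reviewed > max_age]
    return sorted(stale, key=lambda a: a.last_reviewed)
```

The check only finds content that is overdue for review; deciding whether it is still correct remains a human job.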

While Generative AI can produce wonderful results, implementing and maintaining it takes real human effort.

Misconception #3: Generative AI understands better

Generative AI-based chatbots are often compared to older, poorly trained AI chatbots without generative capabilities. People often believe that generative AI truly ‘understands’ the content it generates. In reality, these models don’t understand content in the human sense. They analyze patterns in data and replicate those patterns to generate new content. In other words, in an enterprise chatbot Gen AI is essentially rephrasing your existing, human-written policies, not coming up with truly new answers. If your policies have gaps, errors, or omissions, the generated answers will be equally flawed.

There are no shortcuts when your data/knowledge quality is poor.

Misconception #4: Generative AI can easily be used in the enterprise

Incorporating Generative AI into enterprise settings is not without its challenges. Issues of privacy, security, and the risk of data leakage loom large. Solutions involve dedicated, isolated models that are considerably more costly, raising questions about practical widespread enterprise use.

Currently, the options for addressing these concerns are: 1) training your own model in your own tenancy (by far the most costly), 2) fine-tuning an existing LLM (also very costly, though less so than #1), and 3) using a RAG method, where enterprise data is piped into the process via prompts (though questions of accuracy and data leakage persist).

Further, the answers generated are not guaranteed to be accurate. While there are techniques for putting guardrails around responses, none of them work 100% of the time. Could generating answers that are occasionally inaccurate create unknown liabilities? As of this post (Nov 2023), one such issue was documented by Gregory Kamradt, whose testing showed GPT-4 with a 128k-token context window failing to reliably answer from facts contained in large documents, such as the lengthy policies that are common in big enterprises. https://www.linkedin.com/feed/update/urn:li:activity:7128720460170625025/
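Teams can run a similar check against their own documents before going live. The sketch below is a simplified harness in the spirit of that kind of test, not a reproduction of Kamradt’s setup: it hides a known fact (the “needle”) at different relative depths in long filler text and records whether the model’s answer contains it. `ask_model` is a hypothetical placeholder for your LLM call, and the filler, needle, and depths are illustrative.

```python
from typing import Callable


def build_haystack(filler: list[str], needle: str, depth: float) -> str:
    """Insert the needle sentence at a relative depth (0.0 = start, 1.0 = end)."""
    position = round(depth * len(filler))
    return " ".join(filler[:position] + [needle] + filler[position:])


def run_needle_test(
    ask_model: Callable[[str], str],  # hypothetical wrapper around your LLM API
    filler: list[str],
    needle: str,
    question: str,
    expected: str,
    depths: list[float],
) -> dict[float, bool]:
    """Return {depth: True/False} depending on whether the answer contains the expected fact."""
    results = {}
    for depth in depths:
        context = build_haystack(filler, needle, depth)
        prompt = f"{context}\n\nUsing only the document above, answer: {question}"
        results[depth] = expected.lower() in ask_model(prompt).lower()
    return results
```

Repeating the run across several context lengths and depths shows where, if anywhere, a given model starts missing facts in documents the size of your own policies.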

Data security, cost, and unknown liabilities all make adopting Generative AI in the enterprise a layered and complicated undertaking.

Misconception #5: Generative AI is a machine, therefore without bias

The impact of data bias is often underestimated. Generative AI can, and often does, propagate the biases present in its training data, producing outputs that may reinforce existing prejudices. An existing large model will have been trained on a large dataset, usually drawn from public sources such as Wikipedia and Reddit. Because that data was created by humans, their biases can be embedded in it and influence the output of Gen AI. To be fair, talking to a human also involves bias, so avoiding Gen AI is not necessarily the answer. Rather, organizations need to be aware of the risks of building their own model versus using someone else’s.
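A toy example shows the mechanism. If next-word probabilities are estimated from a corpus in which one continuation dominates, the estimates reproduce that skew. The hypothetical corpus below follows “the engineer said” with “he” 80% of the time, and a model that simply counts continuations learns exactly that ratio. Real LLMs are vastly more complex, but their learned probabilities likewise reflect their training data.

```python
from collections import Counter


def next_word_distribution(corpus: list[str], context: str) -> dict[str, float]:
    """Estimate P(next word | context) by counting what follows `context` in the corpus."""
    context_words = context.lower().split()
    n = len(context_words)
    counts: Counter = Counter()
    for sentence in corpus:
        words = sentence.lower().split()
        # Slide a window over the sentence and record the word after each match.
        for i in range(len(words) - n):
            if words[i:i + n] == context_words:
                counts[words[i + n]] += 1
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


# Hypothetical, deliberately skewed training data.
corpus = (
    ["the engineer said he would review it"] * 8
    + ["the engineer said she would review it"] * 2
)
```

Calling `next_word_distribution(corpus, "the engineer said")` yields 0.8 for “he” and 0.2 for “she”: the skew came from the data, not from any rule written into the model.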

The dilemma lies in whether to use a pre-trained model, with its inherent biases, or invest in developing a bespoke model, often at prohibitive expense.

Misconception #6: Generative AI can learn and improve on its own

A common myth is that AI can autonomously learn and improve after training. In truth, without new data or retraining, an AI’s knowledge becomes stagnant. Continuous human intervention is necessary to update and refine these models. Even a RAG approach requires humans to feed the AI updated information in order to improve outcomes.

There are no shortcuts or magic in AI. It’s all about timely, high-quality, evolving data.

Misconception #7: Generative AI is affordable

Text-based Generative AI depends on Large Language Models. In the case of GPT, those models take months to train and can consume $100M worth of compute. Every call to an LLM like GPT is metered, with each bundle of tokens costing a few cents. A token is roughly four characters of English text, or about three-quarters of a word. Every input and output token is charged, and because the entire conversation history is re-sent with each new message, longer conversations pay again for the initial knowledge seeding at every press of the Enter key. A recent study by Gideon Taylor found that using GPT-4 Turbo as the exclusive engine for an enterprise chatbot would cost 4x to 10x as much as current methods. At that price point, most organizations won’t be rolling out pure LLM-based enterprise chatbots.
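The arithmetic behind growing conversation costs can be sketched in a few lines. Because each turn re-sends the seeded knowledge plus the full history, input tokens grow with every turn, so total cost grows faster than the number of turns. All prices and token counts below are hypothetical placeholders, not figures from the Gideon Taylor study or any provider’s current price list, and the four-characters-per-token estimate is only a rule of thumb.

```python
def approx_tokens(text: str) -> int:
    """Rough token estimate using the ~4 characters per token rule of thumb."""
    return max(1, round(len(text) / 4))


def conversation_cost(
    turns: int,
    seed_tokens: int,            # retrieved knowledge / instructions sent up front
    user_tokens_per_turn: int,
    reply_tokens_per_turn: int,
    input_price_per_1k: float,   # hypothetical $ per 1,000 input tokens
    output_price_per_1k: float,  # hypothetical $ per 1,000 output tokens
) -> float:
    """Total cost of a chat in which the full history is re-sent on every turn."""
    total = 0.0
    history = seed_tokens
    for _ in range(turns):
        prompt_tokens = history + user_tokens_per_turn  # everything so far plus the new message
        total += prompt_tokens / 1000 * input_price_per_1k
        total += reply_tokens_per_turn / 1000 * output_price_per_1k
        history = prompt_tokens + reply_tokens_per_turn  # the reply joins the history
    return total


# Placeholder scenario: 2,000 seeded tokens, 50-token questions, 200-token replies.
one_turn = conversation_cost(1, 2000, 50, 200, 0.01, 0.03)
twenty_turns = conversation_cost(20, 2000, 50, 200, 0.01, 0.03)
```

With these placeholder numbers, one 20-turn conversation costs nearly twice as much as 20 separate single-turn exchanges, because every later turn pays again for everything that came before it.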

The surgical use of LLMs inside a bot is a safer and far less expensive approach, considering where we currently are in the Gen AI hype cycle.

Conclusion

Generative AI is undeniably transformative. It is imperative, though, to navigate its landscape with a clear-eyed view of its capabilities and limitations. At Gideon Taylor, we integrate these technologies with prudence, advocating for the adoption of current, reliable AI solutions while staying abreast of evolving models. Should you act now to leverage the enterprise value of AI? Yes. Will Gen AI costs decrease and its capabilities expand in the future? Yes. To profit from AI now without locking your enterprise out of the growing Gen AI opportunity, it is paramount to partner with an AI provider that is growing with the AI landscape and will help you leverage the practical power of AI without succumbing to, or being paralyzed by, the hyperbole.

In our next post, we will examine how traditional AI works compared to Generative AI and what it takes to implement and manage both. Should you have any thoughts or questions, we would love to hear from you! Just click the Contact Us button below.